Yesterday Yiyang and I presented Birdveillance for our final Physical Computing class. We’re proud of what we built, but there is still more to do, and we hope we’ll get a chance to develop it further for the winter show.

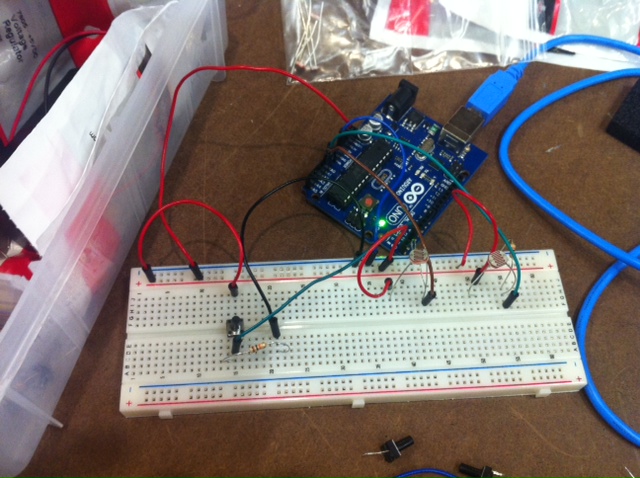

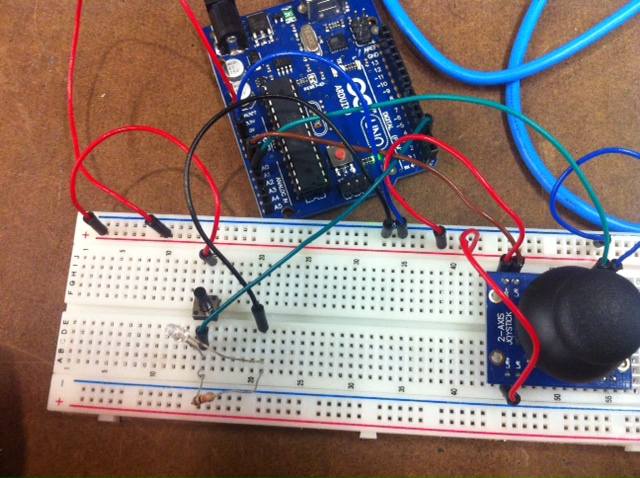

TO DO LIST:

** install flash in head, tweak head & eyes

** felt neck & tube

** will email work, or do we need to switch to an HTTP POST JSON API?

** beak

– figure out Speech to Text

– Beak

– eyes

– sew wings

– wings: motors?

– upload photos somewhere else to get around twitter photo upload limits

– feet?

– responds to sound threshold (goes to sleep if below threshold)

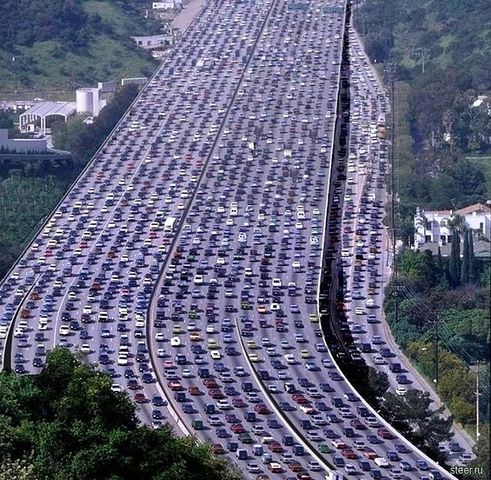

– slower motion to catch the faces

– clearer eye / photo signal (add a flash from a camera)

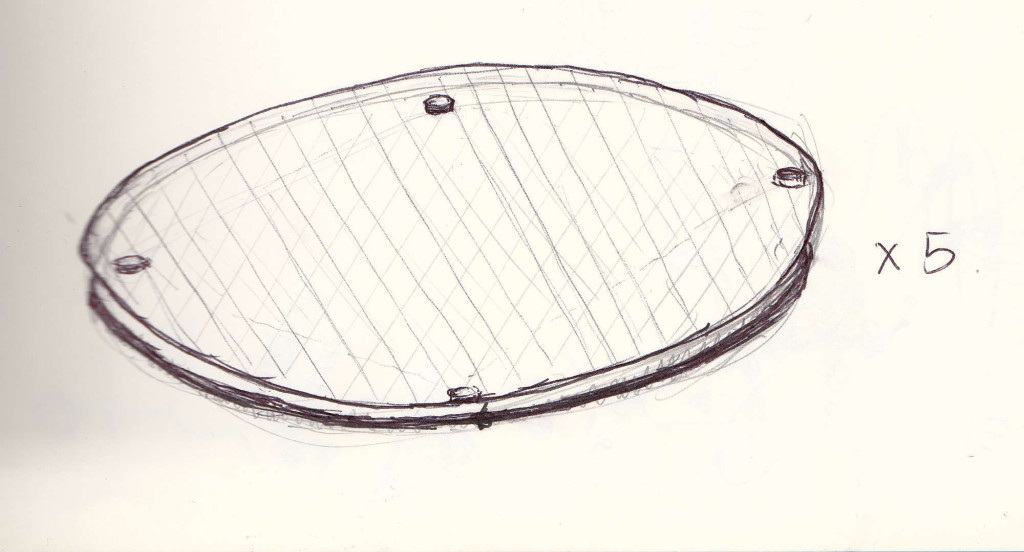

– bird stand: box, label with twitter handle
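
For the sound threshold item above, here is a rough sketch of how the sleep/wake logic might look. This is not our actual code: the `SoundGate` class, the threshold value, and the `quiet_limit` idea (waiting for a few quiet readings in a row before going to sleep, so the bird does not nod off during a short pause) are all assumptions made up for illustration.

```python
class SoundGate:
    """Hypothetical helper: tracks whether the bird should be awake."""

    def __init__(self, threshold, quiet_limit=3):
        self.threshold = threshold      # sound level that counts as "noise" (assumed units)
        self.quiet_limit = quiet_limit  # quiet readings in a row before sleeping (assumption)
        self.quiet_count = 0
        self.awake = False

    def update(self, level):
        """Feed one sound reading; return True if the bird should be awake."""
        if level >= self.threshold:
            # Loud enough: wake up and reset the quiet counter.
            self.quiet_count = 0
            self.awake = True
        else:
            # Below threshold: only sleep after enough quiet readings in a row.
            self.quiet_count += 1
            if self.quiet_count >= self.quiet_limit:
                self.awake = False
        return self.awake


if __name__ == "__main__":
    gate = SoundGate(threshold=500, quiet_limit=2)
    for reading in [100, 600, 100, 100]:
        print(reading, gate.update(reading))
```

Setting `quiet_limit=1` would give the simplest version of the idea, where the bird goes to sleep on the first reading below the threshold.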